I'm trying to install Beautiful Soup for Python to do some web scraping. pip reports a successful install, but the module cannot be found when I import it.

C:\>pip install beautifulsoup4

You are using pip version 6.0.6, however version 7.1.0 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

Collecting beautifulsoup4

Downloading beautifulsoup4-4.4.0-py2-none-any.whl (81kB)

100% |################################| 81kB 152kB/s ta 0:00:011

Installing collected packages: beautifulsoup4

Successfully installed beautifulsoup4-4.4.0

C:\>pip install BeautifulSoup

You are using pip version 6.0.6, however version 7.1.0 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

Collecting BeautifulSoup

Downloading BeautifulSoup-3.2.1.tar.gz

Installing collected packages: BeautifulSoup

Running setup.py install for BeautifulSoup

Successfully installed BeautifulSoup-3.2.1

C:\>

Traceback (most recent call last):

File "C:\Scrap1.py", line 1, in <module>

from bs4 import BeautifulSoup

ImportError: No module named bs4

>>>

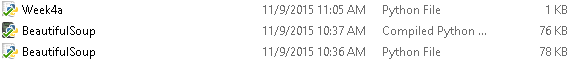
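When pip reports success but the import still fails, one thing worth checking is whether the interpreter running the script is the same one pip installed into. The logs above do not confirm that this is the cause here, so treat the following as a diagnostic sketch only. The helper name `can_import` is made up for illustration.

```python
import importlib
import sys


def can_import(module_name):
    """Return True if module_name imports in the running interpreter."""
    try:
        importlib.import_module(module_name)
    except ImportError:
        return False
    return True


if __name__ == "__main__":
    # The interpreter running this script; pip may belong to a different one.
    print("interpreter: " + sys.executable)
    print("bs4 importable: " + str(can_import("bs4")))
    # Directories this interpreter searches for installed packages.
    for entry in sys.path:
        print("  " + entry)
```

Running this with the same command used to launch Scrap1.py shows which Python is in use and where it looks for packages. If the site-packages directory pip wrote to is not in that list, the two are not the same installation.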

So I downloaded the source and installed it manually.

C:\beautifulsoup4-4.4.0\beautifulsoup4-4.4.0>python setup.py install

C:\Python27\lib\distutils\dist.py:267: UserWarning: Unknown distribution option: 'use_2to3'

warnings.warn(msg)

running install

running bdist_egg

running egg_info

writing requirements to beautifulsoup4.egg-info\requires.txt

writing beautifulsoup4.egg-info\PKG-INFO

writing top-level names to beautifulsoup4.egg-info\top_level.txt

writing dependency_links to beautifulsoup4.egg-info\dependency_links.txt

reading manifest file 'beautifulsoup4.egg-info\SOURCES.txt'

reading manifest template 'MANIFEST.in'

writing manifest file 'beautifulsoup4.egg-info\SOURCES.txt'

installing library code to build\bdist.win-amd64\egg

running install_lib

running build_py

creating build

creating build\lib

creating build\lib\bs4

copying bs4\dammit.py -> build\lib\bs4

copying bs4\diagnose.py -> build\lib\bs4

copying bs4\element.py -> build\lib\bs4

copying bs4\testing.py -> build\lib\bs4

copying bs4\__init__.py -> build\lib\bs4

creating build\lib\bs4\builder

copying bs4\builder\_html5lib.py -> build\lib\bs4\builder

copying bs4\builder\_htmlparser.py -> build\lib\bs4\builder

copying bs4\builder\_lxml.py -> build\lib\bs4\builder

copying bs4\builder\__init__.py -> build\lib\bs4\builder

creating build\lib\bs4\tests

copying bs4\tests\test_builder_registry.py -> build\lib\bs4\tests

copying bs4\tests\test_docs.py -> build\lib\bs4\tests

copying bs4\tests\test_html5lib.py -> build\lib\bs4\tests

copying bs4\tests\test_htmlparser.py -> build\lib\bs4\tests

copying bs4\tests\test_lxml.py -> build\lib\bs4\tests

copying bs4\tests\test_soup.py -> build\lib\bs4\tests

copying bs4\tests\test_tree.py -> build\lib\bs4\tests

copying bs4\tests\__init__.py -> build\lib\bs4\tests

creating build\bdist.win-amd64

creating build\bdist.win-amd64\egg

creating build\bdist.win-amd64\egg\bs4

creating build\bdist.win-amd64\egg\bs4\builder

copying build\lib\bs4\builder\_html5lib.py -> build\bdist.win-amd64\egg\bs4\builder

copying build\lib\bs4\builder\_htmlparser.py -> build\bdist.win-amd64\egg\bs4\builder

copying build\lib\bs4\builder\_lxml.py -> build\bdist.win-amd64\egg\bs4\builder

copying build\lib\bs4\builder\__init__.py -> build\bdist.win-amd64\egg\bs4\builder

copying build\lib\bs4\dammit.py -> build\bdist.win-amd64\egg\bs4

copying build\lib\bs4\diagnose.py -> build\bdist.win-amd64\egg\bs4

copying build\lib\bs4\element.py -> build\bdist.win-amd64\egg\bs4

copying build\lib\bs4\testing.py -> build\bdist.win-amd64\egg\bs4

creating build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\test_builder_registry.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\test_docs.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\test_html5lib.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\test_htmlparser.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\test_lxml.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\test_soup.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\test_tree.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\tests\__init__.py -> build\bdist.win-amd64\egg\bs4\tests

copying build\lib\bs4\__init__.py -> build\bdist.win-amd64\egg\bs4

byte-compiling build\bdist.win-amd64\egg\bs4\builder\_html5lib.py to _html5lib.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\builder\_htmlparser.py to _htmlparser.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\builder\_lxml.py to _lxml.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\builder\__init__.py to __init__.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\dammit.py to dammit.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\diagnose.py to diagnose.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\element.py to element.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\testing.py to testing.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\test_builder_registry.py to test_builder_registry.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\test_docs.py to test_docs.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\test_html5lib.py to test_html5lib.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\test_htmlparser.py to test_htmlparser.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\test_lxml.py to test_lxml.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\test_soup.py to test_soup.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\test_tree.py to test_tree.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\tests\__init__.py to __init__.pyc

byte-compiling build\bdist.win-amd64\egg\bs4\__init__.py to __init__.pyc

creating build\bdist.win-amd64\egg\EGG-INFO

copying beautifulsoup4.egg-info\PKG-INFO -> build\bdist.win-amd64\egg\EGG-INFO

copying beautifulsoup4.egg-info\SOURCES.txt -> build\bdist.win-amd64\egg\EGG-INFO

copying beautifulsoup4.egg-info\dependency_links.txt -> build\bdist.win-amd64\egg\EGG-INFO

copying beautifulsoup4.egg-info\requires.txt -> build\bdist.win-amd64\egg\EGG-INFO

copying beautifulsoup4.egg-info\top_level.txt -> build\bdist.win-amd64\egg\EGG-INFO

zip_safe flag not set; analyzing archive contents...

creating dist

creating 'dist\beautifulsoup4-4.4.0-py2.7.egg' and adding 'build\bdist.win-amd64\egg' to it

removing 'build\bdist.win-amd64\egg' (and everything under it)

Processing beautifulsoup4-4.4.0-py2.7.egg

Copying beautifulsoup4-4.4.0-py2.7.egg to c:\python27\lib\site-packages

Adding beautifulsoup4 4.4.0 to easy-install.pth file

Installed c:\python27\lib\site-packages\beautifulsoup4-4.4.0-py2.7.egg

Processing dependencies for beautifulsoup4==4.4.0

Finished processing dependencies for beautifulsoup4==4.4.0

I tested it and the import error is gone.

C:\>python

Python 2.7 (r27:82525, Jul 4 2010, 07:43:08) [MSC v.1500 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> from bs4 import BeautifulSoup

>>> quit()

But lxml is failing now when I run my Python web scraper code.

Traceback (most recent call last):

File "C:\Scrap1.py", line 31, in <module>

categories = get_category_links(food_n_drink)

File "C:\Scrap1.py", line 12, in get_category_links

soup = make_soup(section_url)

File "C:\Scrap1.py", line 9, in make_soup

return BeautifulSoup(html, "lxml")

File "build\bdist.win-amd64\egg\bs4\__init__.py", line 156, in __init__

% ",".join(features))

FeatureNotFound: Couldn't find a tree builder with the features you requested: lxml. Do you need to install a parser library?

>>>
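The FeatureNotFound error means Beautiful Soup could not load the parser named in the call. As a stopgap, a script can check whether lxml imports and otherwise fall back to Beautiful Soup's built-in "html.parser". This is a minimal sketch, not a fix for the missing lxml itself. `pick_parser` is a hypothetical helper, and the two parsers can build slightly different trees from the same HTML.

```python
import importlib


def pick_parser(preferred="lxml", fallback="html.parser"):
    """Return preferred if its module imports here, otherwise fallback."""
    try:
        importlib.import_module(preferred)
    except ImportError:
        return fallback
    return preferred


parser_name = pick_parser()
print(parser_name)
# Hypothetical use inside make_soup from the traceback:
#     return BeautifulSoup(html, parser_name)
```

If this prints html.parser, the interpreter running the script cannot import lxml, whatever pip reported.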

C:\>pip install --upgrade lxml

You are using pip version 6.0.6, however version 7.1.0 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

Collecting lxml

Downloading lxml-3.4.4-cp27-none-win_amd64.whl (3.3MB)

100% |################################| 3.3MB 660kB/s ta 0:00:01

Installing collected packages: lxml

Successfully installed lxml-3.4.4

C:\>pip install cssselect

You are using pip version 6.0.6, however version 7.1.0 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

Collecting cssselect

Downloading cssselect-0.9.1.tar.gz

Installing collected packages: cssselect

Running setup.py install for cssselect

Successfully installed cssselect-0.9.1

Reinstalling lxml with pip did not take care of it.

Traceback (most recent call last):

File "C:\Scrap1.py", line 31, in <module>

categories = get_category_links(food_n_drink)

File "C:\Scrap1.py", line 12, in get_category_links

soup = make_soup(section_url)

File "C:\Scrap1.py", line 9, in make_soup

return BeautifulSoup(html, "lxml")

File "build\bdist.win-amd64\egg\bs4\__init__.py", line 156, in __init__

% ",".join(features))

FeatureNotFound: Couldn't find a tree builder with the features you requested: lxml. Do you need to install a parser library?

>>>
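If the `pip` on PATH belongs to a different Python installation than the one running the script (an assumption; nothing above proves it), invoking pip as a module of a specific interpreter removes the ambiguity. The sketch below only builds and prints such a command. `pip_install_command` is an illustrative name, not part of pip.

```python
import sys


def pip_install_command(package):
    """Build a pip command bound to the interpreter running this script."""
    return [sys.executable, "-m", "pip", "install", package]


if __name__ == "__main__":
    # Printed rather than executed, so nothing gets installed by accident.
    print(" ".join(pip_install_command("lxml")))
```

Pasting the printed command into the console installs lxml for exactly the interpreter that produced it.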

Downloading and installing it manually fixed my issue.